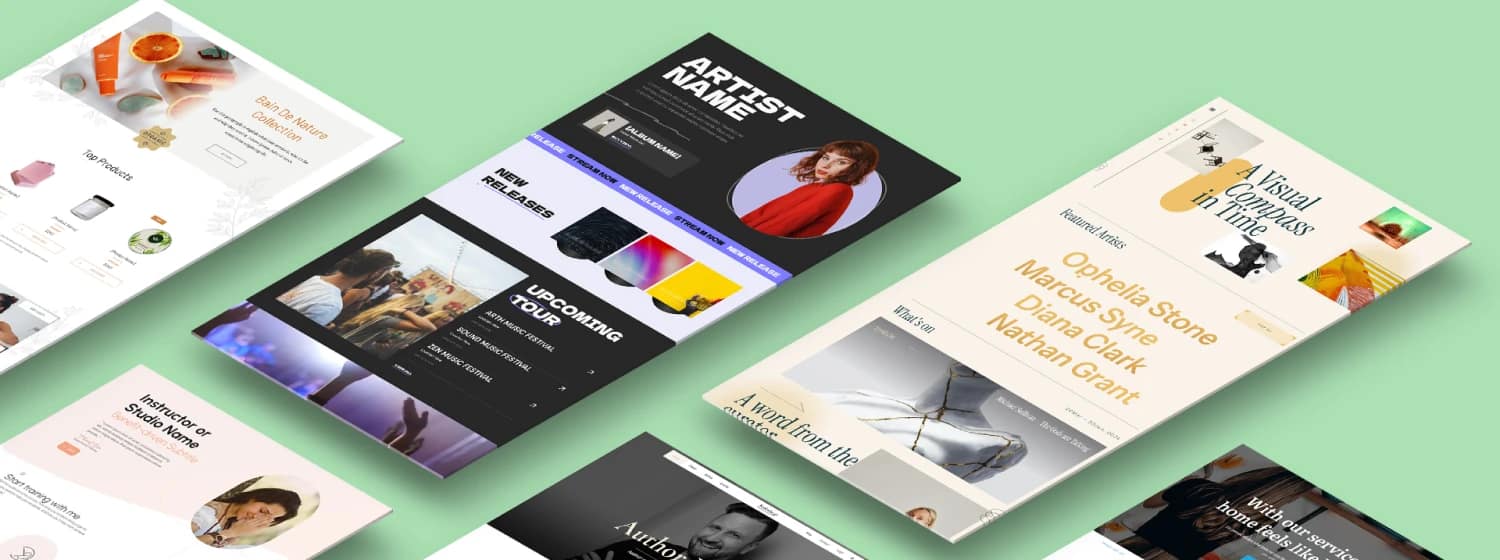

Kickstart your website with our beautiful themes & templates

400+ Templates

100% Customizable

Fully Responsive

Categories

- All
- Business & Marketing
- Education
- Fashion & Beauty
- Fitness & Health
- Creative
- Travel & Lifestyle
- Events
- Other

Get started with your website today!

Curious? Watch How You Can Make Our Templates Yours

Another great set of templates for Thrive Landing Pages. You guys provide some of the best pre-made landing page templates anywhere. Keep up the good work!

Sam

Thrive Architect has been a lifesaver. It took some time getting used to the plugin, but after watching a few videos, I discovered that it's a potent tool. I'm a writer with minimal experience with coding and using Thrive Architect is helping me create the website I see in my head.

Charles Minguez

Amazing. I love how the new product works. The mobile edits are money. I've tried Divi, Cornerstone, Beaver Builder, Visual Builder, and Salient and Thrive continue to come out on top as the best content builder out there. Keep up the great work!

Mark V

I thoroughly enjoy your training videos and the templates Thrive Themes provides. I always learn something new and I truly appreciate how easy you make it to achieve excellence.

J.L. Gibson

You guys are awesome...and I love the new templates!

Becky Norwood

I'm really no designer so these templates are a huge help!

Seán

I have to say, building websites has become FUN again! I am Loving Thrive Architect 🙂

Kevin B

You guys are simply awesome. I am loving everything you are doing at the moment. Keep up the great work; you really are making such a big difference in this field.

Jule Fuller

This is such an amazing offering. You guys are honestly the best in the business.

Renee G

You guys rock, man. So glad a non-tech guy like myself found out about Thrive Themes. I'll never go anywhere else.

Ian R

I just love your themes and am so glad I found them. As someone who is not a designer you have made it very easy to get everything up and running. Thanks for a great framework.

Shane Dolby

Start Building Your Conversion-Focused Website Now!