If you want to learn how to run an A/B test on WordPress, you’ve come to the right guide.

A/B testing, split testing, and A/B split testing are all terms for the same thing:

You create one or more variations of an element (a page, an opt-in form, a blog title, etc.), and a tool splits the incoming traffic among the variations so you can compare which one converts better.

If tech isn’t your specialty, and you like to keep things simple, the thought of running an A/B test on your own might send a shiver down your spine.

Don't worry. We've got you.

Split testing your landing pages is crucial for raising your business’s bottom line – and it can be easy to do so (with the right tool).

Keep reading to learn how to run your first A/B test and start your journey to conversion optimization today.

Why Should You A/B Test Your Web Pages?

A/B testing is a must.

If you want to see real improvements in your page and form conversion rates, you need to split-test your landing pages regularly.

How else will you identify the elements that are contributing to your sales and sign-ups?

A/B testing can help create a better user experience for your site visitors and provide you with useful data to improve your page designs.

Some business owners skip A/B tests because they worry that it’s too complicated to set one up – but that doesn’t have to be the case.

There are a number of WordPress A/B testing tools that are easy to set up and use.

Google Optimize used to be the go-to resource, but Google discontinued it in September 2023, and other viable split testing plugins have stepped in to fill the gap.

We’re about to show you how to use one of them to run your first test.

How to Run an A/B Test on Your WordPress Website (Step-By-Step)

By the end of this tutorial, you’ll be able to run an A/B test with ease.

Ready? Let’s dive in:

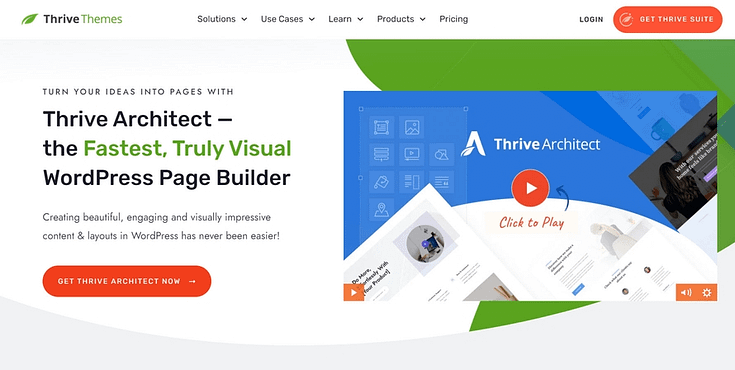

1. Download & Install Thrive Architect + Thrive Optimize

If you aren't getting the conversions you need, there's a high chance your pages need to be optimized for users to take action.

This is a good time to try out a different tool to build your pages and we recommend using Thrive Architect, one of the best WordPress page builder plugins.

If you want to show your target audience that you mean business – this is the plugin to use. This tool is compatible with most popular WordPress themes and can be used by business owners, bloggers, etc.

Design unique landing pages to promote your offers, using Thrive Architect’s drag-and-drop editor and expansive library of fully customizable page and block templates.

Landing page template sets in Thrive Architect

Pick from a variety of design elements to upgrade your templates and put your products in the best light.

The best part? You don’t need to learn HTML, CSS, or any other coding language to create these pages.

Once you’re done, activate Thrive Optimize, our A/B testing plugin, to split test your pages. You can even run a test to compare your old pages, built with your former tools, against your new and improved Thrive Architect-built pages.

When you purchase Thrive Architect, you get immediate access to Thrive Optimize, too – at no additional cost.

You can buy Thrive Architect (and Thrive Optimize) as an individual product, or as a part of our WordPress plugin bundle, Thrive Suite.

Thrive Tip

If you want to get even deeper insights on your pages’ performance, we recommend installing a Google Analytics plugin. MonsterInsights is one of the best tools to get the job done and offers a free plugin and a premium version.

2. Launch a Page in Thrive Architect

Next, open a page in the Thrive Architect editor. You can do this in one of two ways:

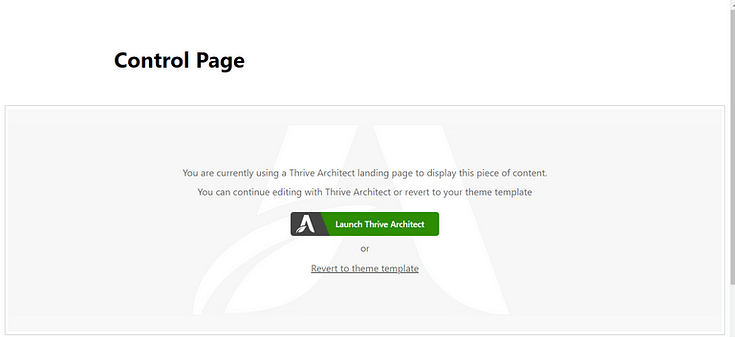

Opening an Existing Page

In the WordPress dashboard, find the page you want to test and select it.

In the WordPress Editor, select “Launch Thrive Architect”. Your page will open in the visual editor.

This page is your “Control”. You don’t need to make any changes to this specific page.

Creating a New Page

If you want to create a new landing page to split test, follow these steps:

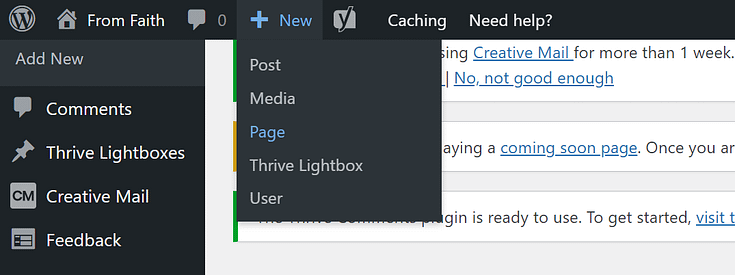

In the WordPress dashboard, click “Add New” and select “Page”.

Name your page and click the green “Launch Thrive Architect” button.

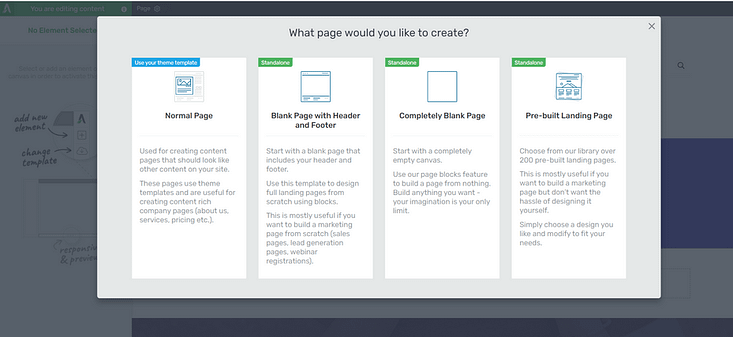

Thrive Architect will provide you with four options:

1. Normal Page

2. Blank Page with Header and Footer

3. Completely Blank Page

4. Pre-built Landing Pages

We recommend choosing the "Pre-built Landing Page" option.

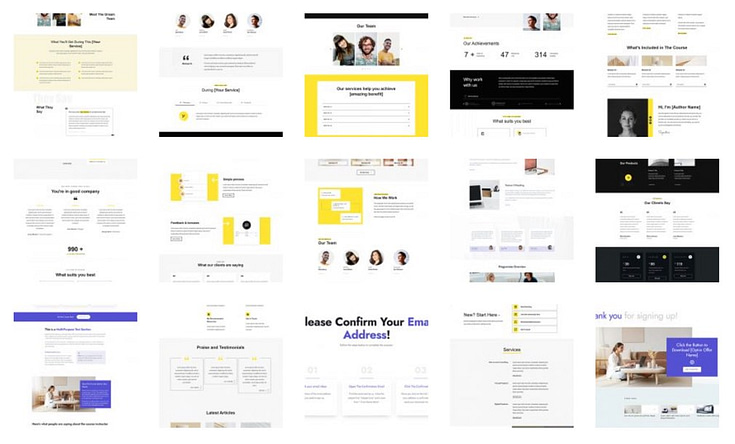

In the Landing Page Library, browse through our page templates and select the one you like most.

Landing page templates in Thrive Architect

Customize it by adding or removing elements and replacing the placeholder text and images with your own copy and visuals. Save your landing page and get ready to create your first A/B test.

3. Create a New Test in Thrive Optimize

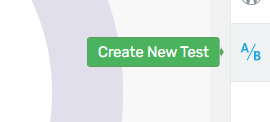

In the right-hand sidebar of the Thrive Architect editor, look for the “A/B” button.

After you click it, you’ll be directed to the Thrive Optimize dashboard.

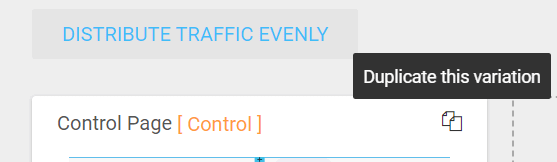

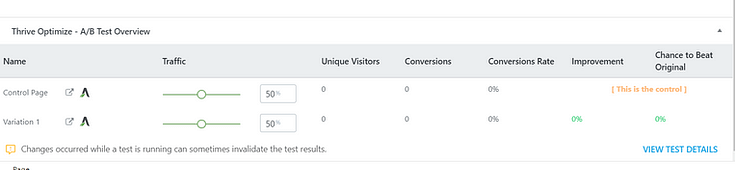

The page you started editing with Thrive Architect is your control page, as shown in the screenshot.

The next page you need to create and work on is your “Variation” page.

4. Create Your Variation Page

Your “Variation” page is where you’ll make changes to test against your control page.

You can create as many variations as you want. For example, if you want to run tests on 3 to 4 different versions of the same page, you can go right ahead.

But for simplicity, we recommend sticking to just one for your first A/B test. Once you’ve got the hang of the process, you can start experimenting with multivariate tests.

If you want to create a page similar to your control page, and make changes to that one, you should duplicate your control page.

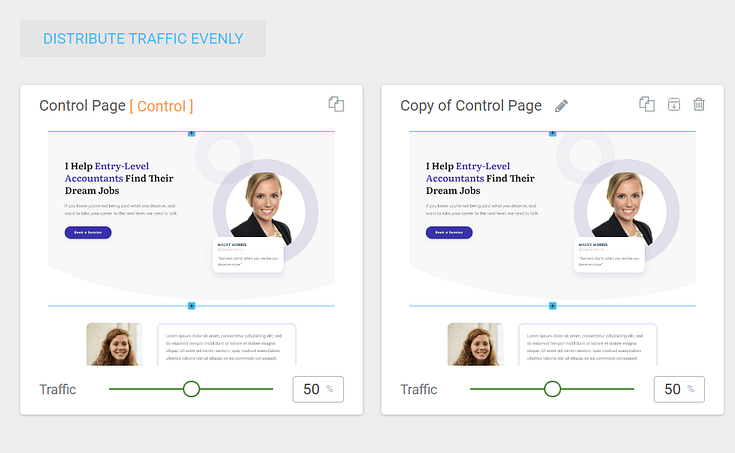

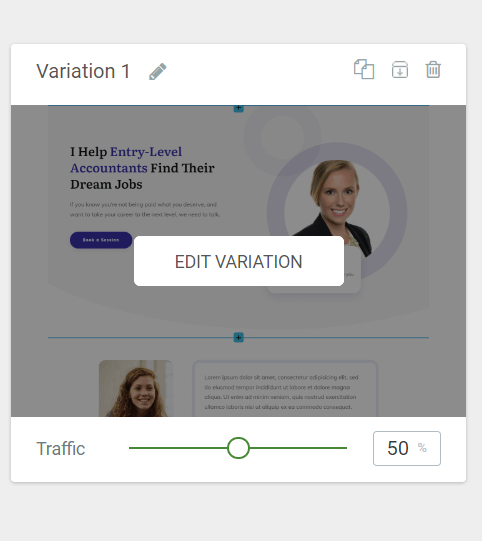

A copy of your control page will appear next to the original. Rename it and click “Edit Variation”.

You’ll be redirected to Thrive Architect, where you’ll customize your “Variation” page.

5. Edit Your Page Variation in Thrive Architect

Before you start customizing your landing page, make sure you've decided on what to test.

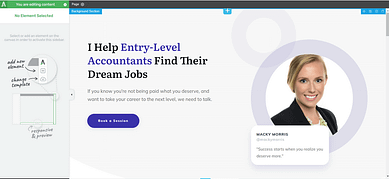

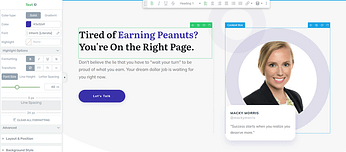

In our example, we chose to test our page’s hero section. We changed the headline, supporting copy, and button text.

Our goal here is to see if the changes we’ve made will lead to a higher click-through rate on the call-to-action button.

In simpler terms, we want to see if the changes to our hero section’s copy will lead to more visitors clicking on the call-to-action button.

Control hero section

Variation hero section

After you’ve made changes to your “Variation” page, save your work and return to the Thrive Optimize dashboard.

6. Configure Your Traffic Settings

In the Thrive Optimize dashboard, you can then set the percentage of traffic you want to send to each page.

By default, the traffic is distributed evenly, but you can use the sliders at the bottom of each card to change the percentages.
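If you’re curious what a percentage-based traffic split looks like under the hood, here is a minimal Python sketch. It is an illustration only, not how Thrive Optimize is actually implemented: the function name `assign_variation`, the visitor IDs, and the hashing approach are all assumptions made for this example. It hashes a visitor ID so the same visitor always lands on the same page, and respects the percentages you would set with the sliders.

```python
import hashlib


def assign_variation(visitor_id: str, weights: dict[str, float]) -> str:
    """Pick a page for a visitor in proportion to the given traffic weights.

    Hypothetical helper for illustration; not part of any plugin's API.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Hash the visitor ID so the same visitor always gets the same page.
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    # Keep 53 bits so the value converts exactly to a float in [0, 1).
    point = (int.from_bytes(digest[:8], "big") >> 11) / 2**53

    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # only reached if floating-point rounding leaves a tiny gap


# Example: send 70% of traffic to the control and 30% to the variation.
split = {"control": 70, "variation": 30}
print(assign_variation("visitor-42", split))
```

Hashing instead of picking at random on every page load is a design choice: it keeps returning visitors on the page they saw first, which keeps the results cleaner.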

7. Set Up Your Test

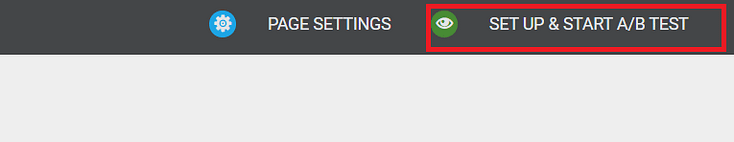

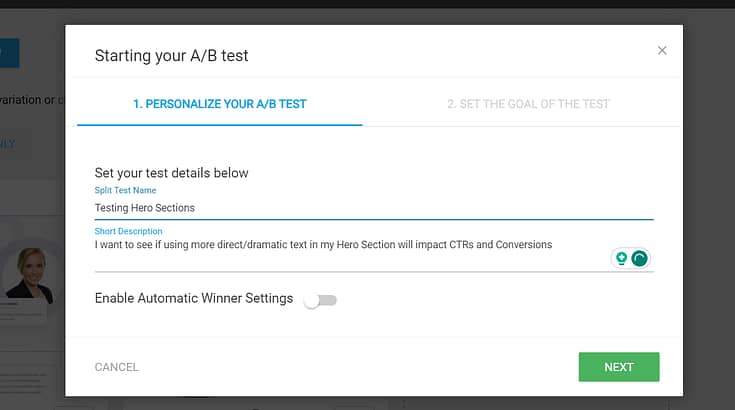

In the top right of the Thrive Optimize dashboard, select “Set Up and Start A/B Test”.

Give your test a name and description.

Right under these fields, you’ll have the option to enable the “Automatic Winner Settings” for your A/B test.

This option will automatically choose the winning page after enough data is gathered.

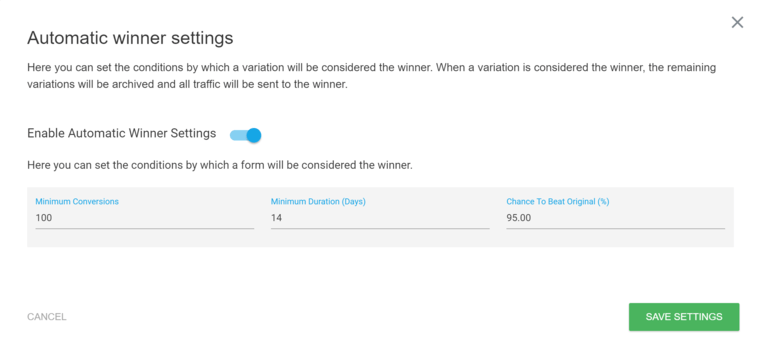

Set up your test and forget about it! Our tools will do the job for you.

Once you’ve selected “Enable Automatic Winner Settings”, a conditions field will appear.

Here, you’ll need to set a minimum:

- Number of conversions to be achieved

- Amount of time the test should run

- Chance to beat the original landing page. A variation must reach this figure to be recognized as the winner. The higher this number, the more reliable your test results will be, so we recommend keeping it at 90% or higher.
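To give you a feel for what a “chance to beat the original” figure means, here is a minimal Python sketch of one common way to estimate it: a Bayesian comparison of two conversion rates. This guide doesn’t describe how Thrive Optimize calculates its own figure, so treat this as an assumption-laden illustration. The function names, the uniform prior, and the default thresholds of 100 conversions and 14 days are hypothetical; only the 90% value comes from the recommendation above.

```python
import random


def chance_to_beat_original(control_conversions, control_visitors,
                            variation_conversions, variation_visitors,
                            draws=20_000, seed=0):
    """Estimate the probability that the variation's true rate beats the control's.

    Samples each conversion rate from a Beta posterior (uniform prior assumed)
    and counts how often the variation comes out ahead.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        control_rate = rng.betavariate(
            1 + control_conversions, 1 + control_visitors - control_conversions)
        variation_rate = rng.betavariate(
            1 + variation_conversions, 1 + variation_visitors - variation_conversions)
        if variation_rate > control_rate:
            wins += 1
    return wins / draws


def meets_winner_conditions(chance, conversions, days_running,
                            min_chance=0.90, min_conversions=100, min_days=14):
    """Check all three minimums; the conversion and day defaults are made up."""
    return (chance >= min_chance
            and conversions >= min_conversions
            and days_running >= min_days)


# Hypothetical data: 1,000 visitors per page, 50 vs. 70 conversions.
chance = chance_to_beat_original(50, 1000, 70, 1000)
print(f"Chance to beat original: {chance:.1%}")
print(meets_winner_conditions(chance, conversions=70, days_running=21))
```

Requiring all three conditions at once is what guards against calling a winner too early: a variation can look far ahead after a handful of conversions purely by luck.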

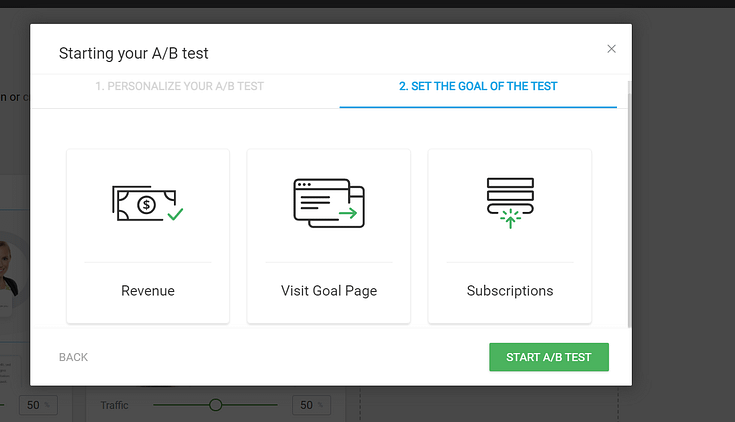

8. Set the Goal of the Test

The next screen gives you three options to choose from – Revenue, Visit Goal Page, and Subscription.

Select the goal that best fits your type of test, and follow the on-screen instructions.

9. Start Your Test

Once you have set up the goal type, you can click "Start A/B Test".

You’ll be redirected to the WordPress Editor, where you can see an overview of your split test at the bottom of the screen.

And that’s how you set up and run your first A/B test!

What Elements Should You A/B Test?

When it comes to A/B testing landing pages on your WordPress site, you'll want to focus on testing elements that are likely to have a significant impact on user behavior and your business outcomes.

Here's a list of common elements that businesses often A/B test:

- Headlines

- Calls to action (CTA button placement, text, color, etc.)

- Landing page designs (layout, length, wording)

- Custom post types

- Lead-generation and contact forms (placement, length, double opt-in vs. single opt-in, popups)

- Social proof (positioning, number of testimonials, adding or removing star ratings, etc.)

- Product descriptions or WooCommerce product pages

- Pricing tables (length, pricing structure, etc.)

- Checkout pages

If your original pages include elements like widgets, sidebars, or carousels, you can test one of these pages against a simpler variation to identify which one provides a more user-friendly experience for your visitors.

Control page without video

Test variation with video

You should prioritize split testing elements that directly affect your most important metrics – sales, sign-ups, or engagement.

Lastly, you don’t have to make major changes to your pages to run an effective test. Sometimes, a tweak as simple as adding testimonials to your page can lead to a 25% spike in your conversion rates, like it did for us.
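To make a figure like that concrete, here is a minimal Python sketch of how a relative uplift in conversion rate is worked out. The visitor and conversion counts are hypothetical, not the numbers from our own test, and the sketch assumes the percentage describes a relative change rather than a jump in percentage points.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_uplift(control_rate: float, variation_rate: float) -> float:
    """Change in conversion rate relative to the control's rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variation_rate - control_rate) / control_rate


# Hypothetical numbers: 40 vs. 50 conversions from 1,000 visitors each.
control = conversion_rate(40, 1000)    # 4% conversion rate
variation = conversion_rate(50, 1000)  # 5% conversion rate
print(f"Relative uplift: {relative_uplift(control, variation):.0%}")
```

In this made-up example the conversion rate moves by one percentage point, from 4% to 5%, which is a relative uplift of about 25%. It’s worth checking which of the two a reported result refers to.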

Next Steps: Drive More Traffic to Your Website

An effective A/B test needs traffic. You need to ensure you’re reaching your target audience through various platforms – search engines, PPC ads, social media, and even email.
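How much traffic is enough? A rough answer comes from a standard sample size formula for comparing two conversion rates. The Python sketch below is a back-of-the-envelope estimate, not something built into Thrive Optimize. The function name, the 5% significance level, and the 80% power are conventional assumptions chosen for this example.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per page to detect a given relative lift.

    Uses the normal-approximation formula for a two-sided test of two proportions.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")

    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical page converting at 5%, hoping to detect a 25% relative lift.
print(visitors_per_variation(0.05, 0.25))
```

With these assumed inputs, the estimate comes out at around 5,300 visitors per page. Smaller lifts or lower baseline conversion rates push that number up quickly, which is why steady traffic matters so much.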

Here are 4 free tutorials to help you create an effective strategy to grow your audience and direct them to your website:

- How to Create SEO-Friendly Blog Posts Users and Bots Will Love (14 Tips)

- 8 Content Marketing Hacks to Grow Your Online Business

- 7 Keyword Research Tips for the Busy Entrepreneur

- How to Get Your Business Noticed and Grow Your Audience

Tip: If you’re happy with your current page builder and don’t want to buy a new one to use the Thrive Optimize add-on, you can check out any of these other split testing plugins: Nelio A/B Testing, VWO (Visual Website Optimizer), and Optimizely. Most of them offer a free version for you to try out.

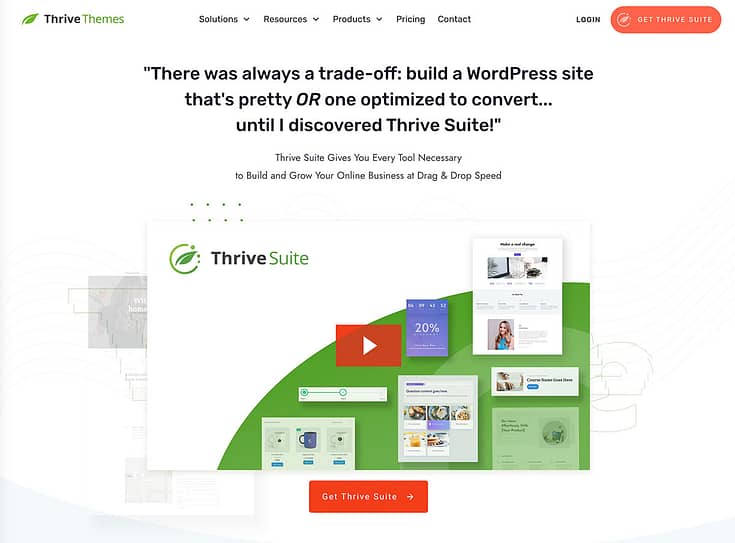

Optimize Your Site for More Conversions

A/B tests are only as effective as the quality of your website and landing pages.

If you’re failing to see conversions, there’s a high chance that your site’s design needs to change.

To create a website that is easy to navigate and looks great, you need the right tools. We recommend using Thrive Suite.

Thrive Suite is an all-in-one toolkit that contains premium plugins, landing page templates, opt-in form templates, quiz templates, and more, all designed to help you create an amazing website for your business.

Whether you need a simple one-page website, a multi-page website with an eCommerce store, or a simple blog, Thrive Suite can help you build what you need.

If you've been thinking about taking your business to the next level and want to use high-quality tools at a very reasonable price, Thrive Suite could be for you.

Click here to learn more about Thrive Suite.