When you read case studies about a high-traffic website that managed to increase email signups by some insane percentage, your brain probably thinks: "That's cool, but it's impossible for me to get the same results."

That's why I'm thrilled to feature Chris in today's case study.

Chris helps his mum with her website www.rosetodd.com and he managed to increase opt-in conversions by 37% with only a few minutes of work using the A/B testing options available in Thrive Leads.

Read on to discover exactly what he did and how you can do the same on your website.

What's Your Hypothesis?

When you start testing opt-in forms (or any other content for that matter) it is important to first create a hypothesis.

A good hypothesis looks something like this:

Changing (element) from X to Y will (result) due to (rationale/research)

Stating clearly why you think your test will bring in the results you're looking for lets you run tests that teach you something about your audience.

Let's take button colors as an example. The hypothesis would read something like this:

Changing the color of the submit button from green to red will result in an increase in signups due to... people liking red more than green?

That's not a good hypothesis! Even if you got a significant result (which is highly doubtful), you would not be able to apply it to anything else in your marketing material.

Compare this to the following hypothesis:

Changing the main benefit in the title of my opt-in offer from "more productive" to "more free time" will increase opt-in rates because my audience is more interested in improving their lifestyle than getting more work done.

If this hypothesis turns out to be true, you'll be able to change your sales page, your emails, your blog content... to speak to this exact benefit and increase overall conversions, not just signups.

From Hypothesis to Real Test

Chris also started out with a hypothesis:

Focusing on the relief of negative feelings will get people to opt in because people want to get rid of those feelings.

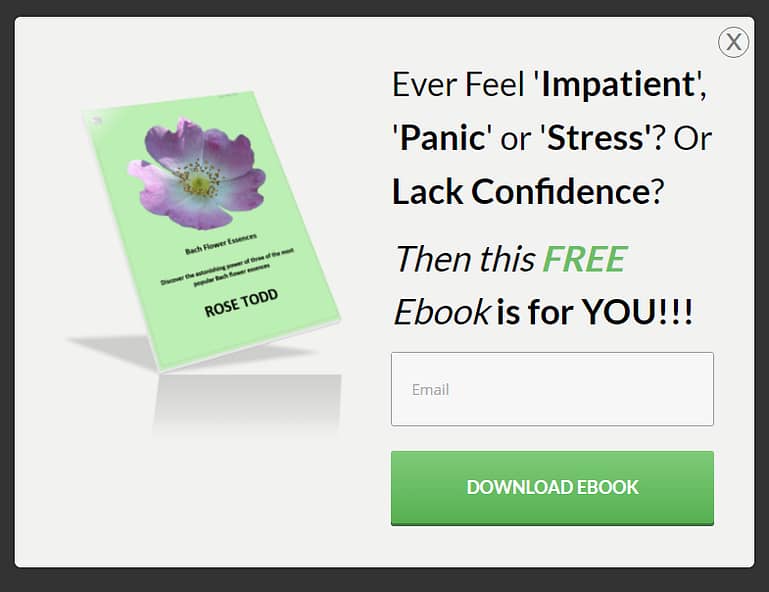

The original, control opt-in form

His test hypothesis, for a variation he launched at the same time, was:

Changing the title of the opt-in form from "feelings" to "3 powerful remedies" will boost opt-in rates because people will be curious to find out what the remedies are.

Variation opt-in form

Now there are a few things Chris did that were very smart:

- He made BIG changes. Instead of just fiddling with the text of the submit button or some other small design detail, he decided to change the value proposition for the opt-in offer. Getting your value proposition right is one of the most important things you can do for your online business. You'll be able to use the insights you learned in all of your marketing materials because you know what makes your audience tick.

- He immediately launched 2 versions. You do not have to wait to get results on the first opt-in form to start testing. You can start A/B tests from day one and boost your signups. This will accelerate your learning and your list growth!

- He kept the settings the same. You should decide what you're testing. Are you testing value propositions? Opt-in form triggers? Opt-in form types? All of these tests can be interesting, but you should choose which one you want to concentrate on and test them one by one. Imagine changing the opt-in form trigger from exit-intent to showing up after 15 seconds while also changing the copy of your opt-in form. When choosing a winner, you won't know what actually made the difference in opt-in rate and you'll have to test again.

The Results

A/B testing results from the Thrive Leads dashboard. (Green = control, Blue = variation)

As you can see, Chris ran the test for a pretty long time (from August 2016 to mid-January 2017) to get significant results. Note how, in the beginning, the variation opt-in form (the blue line) actually outperforms the original one, only to stabilize later and underperform consistently.

This is the perfect showcase of why you should be patient when running A/B tests.

One of the biggest mistakes people make is ending tests prematurely, which leads to false conclusions and hurts your lead generation efforts. The problem is, there is no single right answer when it comes to the perfect time frame for an A/B test.

As a guideline, we recommend:

- Wait at least two weeks. You've probably noticed that traffic on your website varies a lot from day to day. Waiting at least two weeks will give you a better basis for decisions.

- If possible, wait for 100 conversions. If you can get at least 100 conversions over the span of more than two weeks, you can consider your test accurate. If you get 100 conversions before the two-week period is over, still wait the full two weeks before jumping to any conclusions.

- Aim for a minimum accuracy of 95% (= chances to beat the original). If your test doesn't hit this accuracy after a certain period of time (more than 2 weeks and more than 100 conversions), your test is inconclusive.
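The three guidelines above can be sketched as a simple decision rule. This is only a minimal Python illustration, not a Thrive Leads feature: the `AbTestSnapshot` class, the `evaluate_test` function and the sample numbers are hypothetical. It also assumes that the 100 conversions are counted across the whole test, and it treats that guideline as a hard requirement even though it is only recommended "if possible".

```python
from dataclasses import dataclass


@dataclass
class AbTestSnapshot:
    days_running: int               # days since the test started
    conversions: int                # total conversions so far (assumption: all forms combined)
    chance_to_beat_original: float  # percentage shown for the variation, 0-100


def evaluate_test(snapshot, min_days=14, min_conversions=100, min_accuracy=95.0):
    """Apply the guidelines: two weeks, 100 conversions, 95% accuracy."""
    if snapshot.days_running < min_days:
        return "keep running: less than two weeks of data"
    if snapshot.conversions < min_conversions:
        return "keep running: fewer than 100 conversions"
    chance = snapshot.chance_to_beat_original
    if chance >= min_accuracy:
        return "conclusive: the variation wins"
    if chance <= 100.0 - min_accuracy:
        # A very low chance to beat the original means the original wins
        return "conclusive: the original wins"
    return "inconclusive"


# Hypothetical snapshots, for illustration only
print(evaluate_test(AbTestSnapshot(days_running=10, conversions=150, chance_to_beat_original=97.0)))
print(evaluate_test(AbTestSnapshot(days_running=30, conversions=120, chance_to_beat_original=3.69)))
```

The order of the checks matters: a test that looks like a clear winner after ten days is still told to keep running, which is the whole point of the two-week rule.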

For Chris, the winning opt-in form (the original "feelings" control) is converting people at a 28.77% rate and the "remedies" variation at 21.02%, with a 96.3% accuracy.

Now, this might not seem like all that much, but it is actually a relative increase of 37%. For Chris it's the difference between getting 63 rather than 37 subscribers... Not bad for a few minutes of work!
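To see where the 37% comes from, here is a small Python sketch of the arithmetic. The function name `relative_uplift` is made up for this example; the two conversion rates are the ones reported for Chris's test.

```python
def relative_uplift(winner_rate, loser_rate):
    """Relative improvement of the winner over the loser, in percent."""
    return (winner_rate - loser_rate) / loser_rate * 100


winner = 28.77  # conversion rate of the winning form, in percent
loser = 21.02   # conversion rate of the losing form, in percent

print(f"Absolute difference: {winner - loser:.2f} percentage points")
print(f"Relative uplift: {relative_uplift(winner, loser):.0f}%")
```

The absolute gap is under 8 percentage points, but relative to the losing form's rate it amounts to roughly 37% more signups from the same traffic.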

Most of the tests you'll run will not result in a big, world-changing difference. But relentless testing will do so over time. That's exactly how we managed to increase opt-in rates by 268% for John Lee Dumas of Eofire.

What's Next?

Now that Chris has tested and confirmed one of his hypotheses, he can start running a new test.

Because this opt-in form is running on a sales page, I would NOT change the trigger. The main goal of a sales page is to get people to buy your product. Getting them to sign up is a secondary goal, which makes it a perfect fit for an exit-intent lightbox.

For the same reason, I would not test different opt-in form types against each other.

We recommend running these two tests on just about any website because they are BIG changes (an overlay vs. a lightbox, showing a lightbox on page load vs. on exit intent, ...), but not when the form appears on a sales page.

Some things Chris could start experimenting with next are:

- Home in on the copy even more. Maybe Chris can find pain points that resonate even more with the audience. In this article you'll find question prompts to help you discover additional benefits and value propositions for your free opt-in offers in just a few minutes.

- Radically different design. Not just changing a button color but choosing a completely different opt-in form template without the ebook image or with a different ebook image.

- Test a new opt-in offer. This is a little bit more work, but maybe Chris already has some content that he could share as a new opt-in offer? To get inspiration for opt-in offers, check out this article with 13 examples of brilliantly effective opt-in offers.

- Test a multiple-choice opt-in form. Asking for a micro-commitment before asking people to sign up can be a very efficient way to boost opt-in rates and is definitely worth a try.

How Can You Apply This to Your Opt-in Form?

The very first thing I encourage you to do is to add a variation to your existing opt-in form. This will only take a few minutes! Not sure how? Here's a tutorial to guide you through it step by step.

For this variation, do not try anything fancy. Simply change the title.

If you need help improving the copy of your opt-in form, you can check out our free Thrive University course: How to Create Persuasive Opt-in Forms.

In general, make it a habit to always add a test variation to your opt-in forms. You've got nothing to lose!

And one last thing:

Don't rush!

Set up the test and be patient. Even better, forget about it and let the automatic winner settings do the job for you.

Now, I would like to know:

- Are you testing your opt-in forms?

- Have you seen any results?

- Did you get stuck or are you having trouble testing?

Share your experiences in the comments below!

No idea how to run an A/B test. Any videos or tutorials?

Hi Javier,

Here is the tutorial on how to set up an A/B test in Thrive Leads.

I missed it (reread several times!) Which one did better? The original with “feelings” or the other one with “remedies?”

thx

Hi Darlene.

The “feelings” did better, but the button text is also different, which may have had some influence.

This initial test was to test two options that were quite different (so not strictly a ‘pure’ test). Next, I plan to further refine the test, including effect of button text.

Kind Regards

Chris.

The “ever feel” ad. You can see the trend and data in the chart.

Feelings did better. 28% vs 21%. See the blue/green text under the line chart…

From the screenshot it actually looks like Chris’ control with “feelings” is working better than the variant with “remedies”.

My hypothesis would be that specificity is hard to beat, and the “feelings” control is more specific. And in this case (unlike many other times I’ve tried to guess the winner before the test) my hunch seems to be right 😉

Hi Alex,

Yes specificity is often hard to beat but curiosity is also a pretty powerful one… And yeah if there is one thing I learned from running tons of tests it’s that it is impossible to predict which one will be the winner! I’ve been wrong soooo many times.

Can you be more clear about how to determine the accuracy rate? You say aim for it. And you mention Chris’s rate: “For Chris, the winning opt-in form is converting people at a 28.77% rate and the variation at 21.02% with a 96.3% accuracy.” How did you come up with the accuracy rate of 96.3%. I guess I’m missing something.

Hi Hoya,

In the Thrive Leads chart you can see a number in the “chances to beat the original” column. When this number is over 95% (or under 5%), you have a 95% accuracy rate.

In this case, the number is 3.69% hence the 96.3% accuracy rate on the original form. Hope this is more clear now 🙂
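For anyone who wants to see the numbers, here is a rough Python sketch. The `accuracy` function only restates the conversion described above. The `chance_to_beat_original` function is an assumption: it uses a common normal approximation for comparing two conversion rates, and it is not necessarily the formula Thrive Leads uses. The visitor and conversion counts in the example call are hypothetical, because the real counts are not given here.

```python
import math


def accuracy(chance_to_beat_original_pct):
    """Turn a 'chances to beat the original' percentage into an accuracy figure."""
    return max(chance_to_beat_original_pct, 100.0 - chance_to_beat_original_pct)


def chance_to_beat_original(control_conv, control_views, variation_conv, variation_views):
    """Approximate chance (in percent) that the variation's true rate beats the control's.

    Normal approximation for two proportions; an assumed method for illustration.
    """
    p_c = control_conv / control_views
    p_v = variation_conv / variation_views
    se = math.sqrt(p_c * (1 - p_c) / control_views + p_v * (1 - p_v) / variation_views)
    if se == 0:
        return 50.0  # no variance in either form, so nothing can be concluded
    z = (p_v - p_c) / se
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2)))


# 3.69% is the number from the chart; it means the original is the likely winner
print(round(accuracy(3.69), 2))

# Hypothetical counts, for illustration only
print(round(chance_to_beat_original(60, 200, 40, 200), 2))
```

A chance close to 0% is just as informative as one close to 100%: it tells you with the same certainty that the original is the better form.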

Be patient with the test. Hm, I think I made the wrong decision. I never thought that the green and blue lines would change after a few days. I don’t know the reason, but I chose the blue…

Hi Tamas,

Yes, this phenomenon is something we see pretty often (the charts being all over the place in the first days). Not sure why this happens, but that’s why setting up a test and forgetting about it for at least 2 weeks will help you make the right decision!

Seems to me Chris not only made a change in words from “feelings” to “remedies” – but also in the structure of the sentences themselves. The “feelings” opt-in is in the form of a question while the “remedies” is a statement. In your opinion would that influence the outcome?

Hi Andrew,

Yes, that could influence the outcome 🙂

Hanne;

Thank you for the fine article and case study showcasing the work done by Chris Todd on his mum’s website. I wrote to compliment her on her son and the website he created with Thrive Themes. Which of the thrive Themes did Chris use to create the site?

Hi Larry,

He used Ignition on the website.

Hanne; Thank you,

Larry

Hello Hanne! I loved the item and the button and title hint. I’m doing a test today on sales page for ads like you said, thanks for the article!

Hi… Hanne.

Thank you for your suggestion about my opt-in form. During the last webinar, you analyzed the opt-in form on my website mythrivetutor.com. Your evaluation was that the message lacked clarity. I started an A/B test with the new opt-in form you suggested. It will take at least two weeks to see any variation in the statistics. The Thrive Leads plugin is fantastic and I set it to the automatic winner settings. I just cloned the opt-in form, made the changes for the new one and that was all. Easy! Now I am going to add a variation to all my opt-in forms, aiming for a better value proposition and a better message for my audience. Thank you very much for choosing my opt-in form!

Hi Juan,

So happy to hear this! Taking action and A/B testing will make a big difference! Keep us posted 🙂

I’m in the middle of doing my own A/B testing so this article has come in handy. Thank you.

I started to insert the AB test strategy for my projects.

Great to hear Carlos! Let us know how it goes

Post with very interesting tips

Thank you

Hi Hanne,

Please tell me, is Thrive University made with Thrive Themes? Is there a tutorial or something? I want to create a membership page and I want to make it with Thrive!

Thank you!

Hi Adrian,

Thrive University is custom made by our developers. So for the moment it is not yet possible to create exactly the same thing with the tools (but keep your eyes open 😉 )

Hi Hanne,

This is a side question.

“Hanne knows exactly what companies have ever retargeted her (she keeps an updated file).”

How do you accurately track the companies that are retargeting you?

Do you mean the FB ads that appear after you have visited some websites?

Would love to know how you did it.

Hi Albert,

No real system, just taking a lot of screenshots 😉

I did not do A / B tests, but after reading your article I will start using that strategy.

Perfect 🙂

love you guys

Thanks Graça 🙂

A/B testing is essential for seeing what is working and scaling our business! Congratulations on the content, Hanne!

Hello Hanne, how do you A/B test email subject lines? Do you test at a set frequency or do you test every send?

Hi Burn,

We use Active Campaign which makes it easy to A/B test email subject lines and yes we do it for every email.

Hanne, you are the best!! Thanks!!

Thanks

Good job! I signed up for Mailchimp and I’m going to start doing A / B tests.

Great to hear!

Your article is very interesting, and this point about A/B testing really is fundamental to achieving better results. I confess that I have neglected it.

Glad this showed you the importance of testing, Flores!

Hello Hanne, thanks for sharing this case study!

Hanne, I just loved this article and I’m going to immediately put these tips into practice. Nothing better than real experience! I love your blog!

Yey! Thanks Guilherme. Let us know how it goes

Against facts there are no arguments. And this post went straight to the point. I started putting what I learned in this article into practice a few days ago and I’m already seeing drastic changes!!! Loveee

Happy to hear that Bruno!