A conversion optimization checklist isn’t something I thought I needed – until I hit that wall.

I remember launching a landing page I was so proud of. Sleek design, solid offer, a bold CTA button. You know, the kind of thing that screamed “big conversion win.”

Traffic came in. I refreshed my analytics.

Nothing. Crickets. A bounce rate that made me want to bounce, too.

So I did what many of us do: I tweaked. I rewrote the headline. I swapped the image. I even tested three button colors (spoiler: none of them worked). And still — no leads, no sales, just me and a growing pit of frustration.

Sound familiar?

That’s why this post exists — to help you skip the guesswork and focus on what actually improves website conversions. Not vague tips. Not wishful thinking. Just clear, tested strategies that lead to real, measurable results.

Because if you’ve been pouring energy into your site but still can’t seem to move the needle, you’re not alone. And it’s not your fault. Most of us are handed “best practices” with no context or strategy.

Let’s fix the things that are quietly sabotaging your conversions and finally turn that traffic into action.

Why You’re Not Getting the Conversions You Deserve (And What Might Be Causing It)

Let’s be honest — you’re probably doing a lot right already.

You’ve got traffic. A solid offer. Maybe even a lead magnet that took hours to create. You’re following advice, testing ideas, and showing up.

But still… conversions are flat.

If that sounds like you, you’re not alone — and you’re definitely not doing anything wrong. Most likely, you’re just running into one (or more) of these common roadblocks:

If any of those hit a little too close to home, good. That means you’re about to fix the real issues, and this conversion optimization checklist is going to help.

1. Start With Strategy, Not Just Page Tweaks

Look, I love a good button redesign as much as the next conversion nerd — but tweaking your CTA before you’ve nailed the strategy is like putting sprinkles on a cake that hasn’t been baked yet.

Let’s start where the real wins live: clarity and focus.

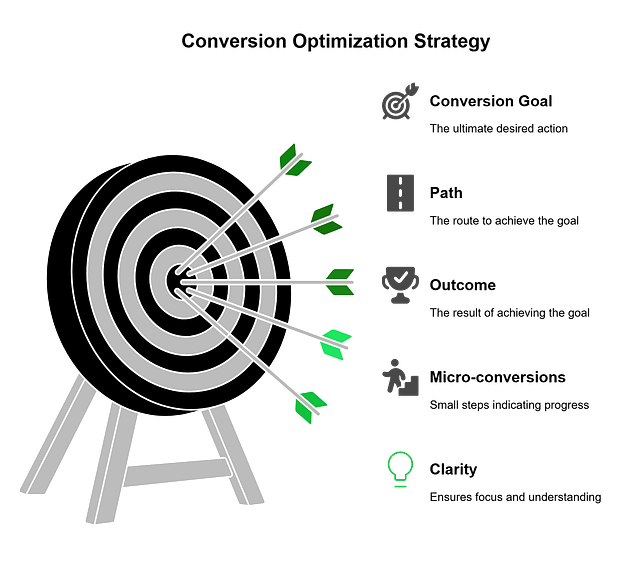

1.1 Set Clear, Conversion-Driven Goals

If your page has five CTAs pointing in different directions, you’re not optimizing — you’re confusing people.

Every page should have one goal, one path, one outcome.

Here’s how to make that happen:

Clarity converts. Confusion kills.

1.2 Know Exactly Who You’re Talking To

Trying to speak to everyone usually means connecting with… no one. (Painful but true.)

You need to know who’s landing on your page, what they care about, and what’s keeping them from saying “yes.”

When your message hits home, conversions stop being a mystery.

👇 If you’re still wondering how to write the kind of CTA that speaks directly to your audience – with the right words, tone, and placement – check out this guide next:

How to Boost Your Call-to-Action Click-Through Rates – it breaks down real examples and why they work (so you can steal the formulas for your own site).

2. Think Like a Psychologist and Ethicist

Conversion optimization isn’t about tricking people. It’s about understanding them — how they think, how they feel, and what makes them feel safe saying “yes.”

That’s where ethical persuasion comes in. You can use psychology to increase conversions without resorting to manipulation or gimmicks. In fact, that’s how you build long-term trust — the kind that turns a click into a customer, and a customer into a loyal fan.

2.1 Apply Behavioral Science (Ethically)

The human brain loves shortcuts — and smart marketers know how to align with that without being shady.

A few powerful (and ethical) tactics:

Used well, these techniques guide decision-making and reduce friction. Used poorly, they break trust fast.

Rule of thumb: If it would annoy you as a customer, don’t do it.

2.2 Build Trust With Every Element

Trust isn’t just built with words — it’s built into design, flow, and experience.

When someone feels confident on your site, conversions happen naturally.

And when they don’t? Even your best offer won’t save you.

With this lens in place, every other CRO decision you make will be stronger, more grounded, and more human.

3. Fix the Silent Conversion Killers (Tech + UX)

Here’s the thing about conversions: they don’t just happen because your copy is clever or your CTA is spicy.

Sometimes, the reason people aren’t clicking that button is because your site never gave them the chance — it loaded too slow, looked messy on mobile, or gave them zero reason to trust you.

This is the “invisible stuff” — the technical and design foundations that quietly kill conversions when they’re off… and supercharge them when they’re dialed in.

So in this part of the conversion optimization checklist, you’ll learn how to fix those foundations.

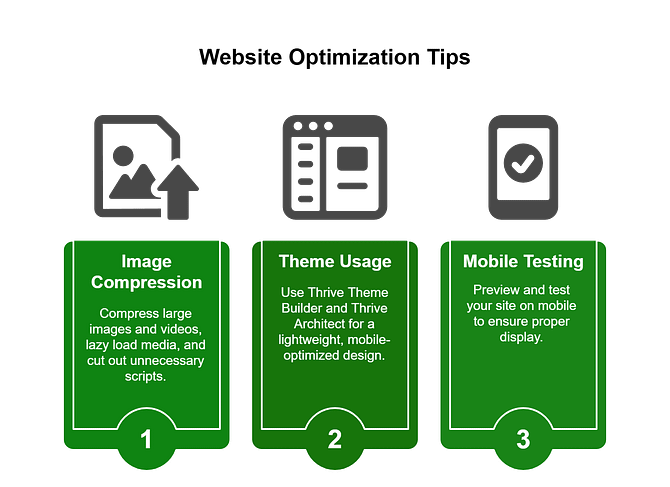

3.1 Improve Page Speed — Especially on Mobile

Let’s not sugarcoat this: if your page takes forever to load, your visitor’s already gone.

And the numbers support this too: 53% of mobile visitors abandon sites that take over 3 seconds to load.

Page speed directly impacts bounce rate, user engagement, and yep — landing page conversions. Especially on mobile, where attention spans are short and connections aren’t always great.
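If you already export load times from your analytics tool, a quick way to see how exposed you are to that 3-second cutoff is to measure the share of page loads that go over it. The sketch below is only an illustration: the function name and the sample numbers are made up, and the threshold is simply the 3 seconds from the stat above.

```python
def slow_load_share(load_times_seconds, threshold=3.0):
    """Return the fraction of page loads slower than the threshold."""
    if not load_times_seconds:
        return 0.0  # no data yet, so nothing to report
    slow = sum(1 for t in load_times_seconds if t > threshold)
    return slow / len(load_times_seconds)


# Hypothetical mobile load times, in seconds.
samples = [1.2, 2.8, 3.5, 6.0]
share = slow_load_share(samples)
print(f"{share:.0%} of loads took longer than 3 seconds")
```

Run it on mobile and desktop samples separately, since the two often look very different.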

What you can do:

If you're trying to increase sales from traffic, this is where you start — because no one converts on a page they can’t even load.

3.2 Clean Up Navigation and Layout

According to my research, 38% of first-time visitors focus on layout and navigation. And your visitors shouldn’t need a map and compass to get through your website.

If you’re throwing links in every direction, adding popups on top of banners on top of sticky bars… you're not guiding users — you're overwhelming them.

The fix?

Remember: website optimization strategies are less about doing more… and more about doing less with purpose.

Not sure what layout works best for your site?

It breaks down proven structures by site type, so you’re not just designing pretty pages — you’re building ones that perform.

3.3 Make It Accessible and Trustworthy

Conversions happen when people feel confident. And confidence comes from two things: clarity and trust.

If someone can’t read your text, navigate your site, or tell whether you’re legit, they’re gone. Don’t believe me? This stat should convince you: cluttered pages can reduce conversions by up to 95%.

So here’s what to check:

It’s not just what you include that builds trust — it’s where and when people see it. Make your site feel safe, clear, and credible, and the conversions will follow.

Accessibility + trust = more people sticking around, and more people taking action.

4. Nail Your Messaging (This Is Where Most Sites Fail)

Your site can load lightning fast, look amazing, and still flop — hard — if your messaging doesn’t land.

I’ve seen it happen too many times: a beautiful landing page with all the right visual ingredients… and copy that sounds like it was written by a committee of beige sweaters.

Here’s the deal: your messaging has one job — to make the visitor feel seen, understood, and ready to take the next step. Let’s make that happen.

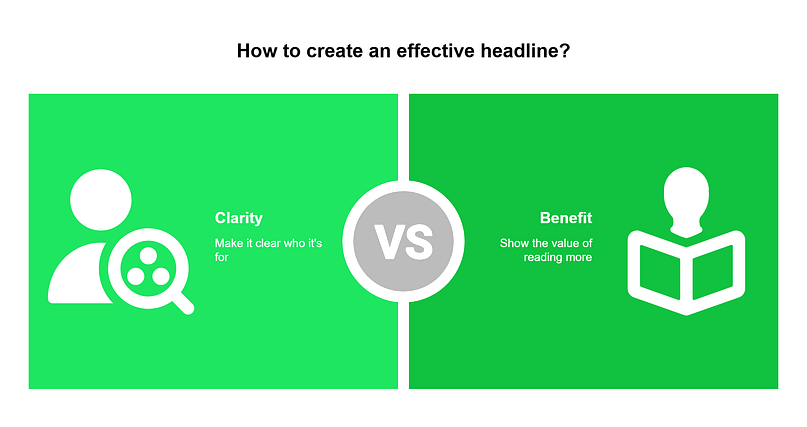

4.1 Headlines That Stop the Scroll

Your headline is your first impression. And on the internet, you’ve got about three seconds before someone scrolls, clicks away, or mentally checks out.

To stand out, your headline needs to do two things fast:

- Make it clear who it’s for
- Show the benefit of reading more

What to do:

If your headline is vague, clever for the sake of being clever, or doesn’t speak to a specific problem… it’s probably costing you conversions.

4.2 Use Storytelling That Leads to a Clear, Compelling CTA

People don’t buy features — they buy transformation. They buy the version of themselves that exists after they’ve worked with you, taken your course, or downloaded your freebie.

That’s why your messaging needs to do more than describe. It needs to connect.

This is one of the fastest ways to improve website conversions — make it obvious what the next step is, and make it easy to take.

✍️ Struggling to make your message land the way it should?

If your copy feels “fine” but not click-worthy, we’ve got you.

This guide to writing conversion-focused copy breaks down how to turn casual readers into committed action-takers — without sounding salesy or robotic.

5. Optimize the Full Journey, Not Just the Landing Page

A lot of people treat conversions like they happen in a single moment — click the button, get the lead, mission accomplished.

But real conversion success? It happens across the entire buyer’s journey.

Getting someone to click your CTA is a win — but it’s just the beginning. What happens next can either build trust and momentum… or cause hesitation and drop-off.

Let’s make sure your forms, popups, and proof are working together to keep the “yes” going.

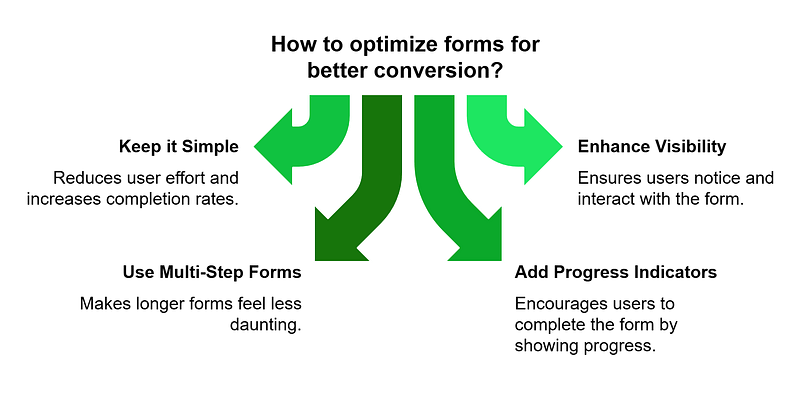

5.1 Make Forms Feel Effortless

Your form is more than a data collection tool — it’s a mini-conversion moment. If it feels like a chore, most people won’t even start.

Small improvements here can lead to big jumps in lead generation.

5.2 🧲 Use Smart Popups That React to Behavior

Popups get a bad rap — but the truth is, it’s not the popup that’s annoying, it’s the timing and relevance.

Used well, popups are your second chance to convert someone who’s almost ready.
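To make "timing and relevance" concrete, here is a minimal sketch of a trigger rule in Python. The signal names and thresholds are hypothetical, not settings from any particular popup tool. The point is only that the decision depends on what the visitor has done so far.

```python
from dataclasses import dataclass


@dataclass
class VisitorSignals:
    """Hypothetical behavior signals collected for one page view."""
    scroll_depth: float       # fraction of the page scrolled, 0.0 to 1.0
    seconds_on_page: float
    exit_intent: bool         # cursor moved toward leaving the page
    already_dismissed: bool   # visitor closed this popup earlier


def should_show_popup(signals: VisitorSignals,
                      min_scroll: float = 0.5,
                      min_seconds: float = 20.0) -> bool:
    """Show the popup only to engaged visitors or ones about to leave."""
    if signals.already_dismissed:
        return False  # never nag someone who already said no
    if signals.exit_intent:
        return True   # last chance before they go
    return (signals.scroll_depth >= min_scroll
            and signals.seconds_on_page >= min_seconds)
```

A visitor who has read half the page for 20 seconds gets the offer. Someone who arrived three seconds ago does not, unless they are already heading for the exit.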

Check out this detailed guide to learn how to use popups the right way.

6. Personalize for Real People (Not Just Segments)

Ever land on a page that makes you feel like just another number? Like it’s shouting into the void hoping someone will care?

Yeah… that’s the opposite of what we’re going for here.

The more your site feels like it’s speaking directly to the person visiting — their goals, their challenges, their context — the more likely they are to convert.

Let’s make your pages feel personal, not generic.

6.1 Use Dynamic Content Based on Behavior

When someone’s already clicked around, visited your pricing page twice, or downloaded your lead magnet, your site should respond — not keep repeating the same pitch.

That’s where personalization magic happens.

This isn’t creepy. It’s helpful, and it works. Studies show 67% of customers expect this kind of content.
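Behavior-based content usually comes down to a few simple rules. Here is a rough sketch using the examples above (repeat pricing visits, a downloaded lead magnet). The function name and the content block labels are placeholders, not features of a specific plugin.

```python
def pick_content_block(pricing_views: int, downloaded_lead_magnet: bool) -> str:
    """Choose which (hypothetical) content block to show a returning visitor."""
    if pricing_views >= 2:
        # Repeat pricing visits suggest they are weighing the purchase.
        return "pricing_faq"
    if downloaded_lead_magnet:
        # They already have the freebie, so offer the next step instead.
        return "next_step_offer"
    # No strong signal yet: show the standard pitch.
    return "default_pitch"
```

The order of the rules matters: the strongest buying signal is checked first, so a visitor who matches several rules sees the most relevant block.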

6.2 Recommend Products or Content Thoughtfully

No one likes the “You might also like…” section when it’s way off. (“I just bought hiking boots — why are you showing me handbags?”)

Relevance is everything.

Done well, this is one of the fastest ways to improve website conversions — by simply showing the right thing to the right person at the right time.

7. Test Relentlessly (But Smartly)

If you’re not testing, you’re just guessing. And guessing doesn’t scale.

The most successful sites aren’t run by perfectionists — they’re run by experimenters who constantly test, learn, and improve. 77% of companies are running A/B tests on their website – don’t be left behind.

7.1 A/B Test the Stuff That Matters

Not every test is worth your time. Some things (like “blue vs green”) won’t change a thing. But others? Total game-changers.

Start by testing:

Use Thrive Optimize to run visual A/B tests right inside your site — no tech headaches, no extra tools.

These kinds of tests are at the core of A/B testing best practices, and they’re where the biggest wins usually come from.
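Before you declare a winner, check whether the difference between variants is bigger than random noise. One common approach, shown here as a sketch rather than as the method any particular testing tool uses, is a pooled two-proportion z-test. The function name and the visitor numbers are illustrative.

```python
import math


def ab_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions) in both variants
    z = (rate_b - rate_a) / std_err
    # For a standard normal, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical test: 1,000 visitors per variant.
p = ab_test_p_value(conv_a=50, n_a=1000, conv_b=80, n_b=1000)
print(f"p-value: {p:.4f}")
```

A small p-value (below 0.05 is a common convention) suggests the lift is unlikely to be chance alone. With small samples, even a big-looking difference can fail this check, which is a good reason to let tests run longer.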

7.2 Go Beyond the Obvious Test

Once you’ve nailed the basics, it’s time to experiment.

Think outside the box with tests like:

Sometimes the biggest improvement comes from taking things away — not adding more.

7.3 Use Heatmaps to Back Your Tests With Behavior Data

Want to know where people are actually clicking? Scrolling? Rage-quitting?

That’s where heatmap analysis and session recordings come in.

Heatmaps show you where users click, scroll, and stop paying attention — like a visual report card for your page. Pair that with session recordings (using tools like Hotjar or Microsoft Clarity), and you’ll get real insight into what’s working… and what’s quietly failing.
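If you are curious what a click heatmap is under the hood, the core idea is just counting clicks per region of the page. The sketch below is a simplified illustration, not how Hotjar or Microsoft Clarity actually implement it. The grid size and click coordinates are made up.

```python
from collections import Counter


def click_grid(clicks, page_width, page_height, cols=4, rows=4):
    """Count clicks per grid cell; keys are (row, col), starting at the top left."""
    counts = Counter()
    for x, y in clicks:
        # Clamp so a click on the far edge still lands in the last cell.
        col = min(int(x / page_width * cols), cols - 1)
        row = min(int(y / page_height * rows), rows - 1)
        counts[(row, col)] += 1
    return counts


# Hypothetical clicks (x, y in pixels) on a 1000 x 2000 px page.
clicks = [(10, 10), (20, 15), (990, 1990)]
grid = click_grid(clicks, page_width=1000, page_height=2000)
hottest_cell, hits = grid.most_common(1)[0]
```

Cells with many clicks are your "hot" zones. A CTA sitting in a cell that never gets clicked is a strong candidate for your next test.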

Combine these tools with Thrive Architect to make fast layout adjustments based on what users are actually doing — not what you think they’re doing.

8. Optimize What Happens After the Conversion

The moment someone fills out your form or clicks “Buy” isn’t the end of the story — it’s the beginning of a new one.

But most websites treat the thank you page like an afterthought. (“Thanks. Bye.”) That’s a missed opportunity. So, welcome to another key part of your conversion rate optimization checklist: what happens after.

Post-conversion is prime real estate. It’s where trust deepens, value compounds, and new offers feel natural — not pushy.

8.1 Thank You Pages With a Purpose

Don’t let your thank you page be a dead end. It should feel like the next step — not a goodbye.

Here’s how to make it work harder:

Small upgrades here = big boosts in engagement.

8.2 Nurture With Automation That Feels Human

No one likes being dropped into a cold, robotic email funnel that sounds like it was written by AI with commitment issues.

Nurturing should feel personal — like a conversation that continues naturally after the first yes.

This is how you build relationships — and relationships turn leads into loyal customers.

Whether you're selling products, courses, or services — your follow-up is part of your funnel. Make it feel human. Make it feel intentional. And your conversions won’t just stick — they’ll scale.

9. Build for Retention, Not Just Acquisition

Getting the first conversion feels great — but real business growth comes from what happens after that.

Repeat customers, loyal subscribers, raving fans? That’s where the magic (and the margin) really is.

9.1 Loyalty, Referrals, and Repeats

Retention isn’t passive — it’s something you build into your funnel just like everything else.

Simple shifts like these keep your audience engaged — and increase sales from traffic you’ve already earned.

9.2 Identify + Prioritize Your Most Valuable People

Not all leads are the same. Some people open every email, click every offer, and come back to buy from you again and again.

These are your high-value customers — the ones who bring in the most income over time.

💡 This is called LTV — or lifetime value. It’s just a way of measuring how valuable a customer is based on how much they’re likely to spend with you over the long run.

Here’s how to find and take care of them:

Focus on the people who already love what you do — and they’ll keep converting again and again.
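To put a rough number on lifetime value, a common simplification is average order value times purchases per year times years as a customer. That formula is an assumption for illustration, not the only way to calculate LTV, and the customer names and figures below are invented.

```python
def lifetime_value(avg_order_value: float,
                   purchases_per_year: float,
                   years_retained: float) -> float:
    """Simplified LTV: order value x purchase frequency x customer lifespan."""
    return avg_order_value * purchases_per_year * years_retained


# Hypothetical customers: (average order, purchases per year, years retained).
customers = {
    "ana": lifetime_value(50.0, 4, 3),
    "ben": lifetime_value(120.0, 1, 2),
    "cy": lifetime_value(20.0, 12, 1.5),
}

# Rank customers from most to least valuable.
ranked = sorted(customers, key=customers.get, reverse=True)
print(ranked)
```

Notice that the biggest single order does not make the most valuable customer. Frequency and how long someone sticks around count just as much.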

📊 Pro tip: If you’re not already tracking what users do after they convert, now’s the time.

MonsterInsights gives you an easy, WordPress-friendly way to monitor behavior, spot drop-offs, and identify your most valuable segments — so you can keep them coming back.

10. Review, Iterate, and Scale

This is where the pros live.

The secret? Don’t just launch your page and walk away. Check what’s working, improve what’s not, and keep testing.

10.1 Start Tracking What’s Really Working

Guessing is fine in the beginning — but if you want to get more sales or signups from your website, you need to know what’s actually working… and what’s not.

Here’s how to start keeping track in a simple, useful way:

10.2 Customize for Business Type (eCom, SaaS, Lead Gen)

There’s no one-size-fits-all in CRO. What works for a coaching site won’t necessarily fly for a Shopify store. The key? Tailor your approach to your business model.

You don’t need to do it all at once. But if you regularly review your results and make smart updates, you’ll get better conversions without guessing.

Conversion isn’t a finish line — it’s a feedback loop. So look at your data. Test smarter. And when you find something that works?

Scale it like your business depends on it. Because it kinda does.

Bonus: 5 Out-of-the-Box CRO Experiments to Try

Once you’ve nailed the basics, it’s time to get creative. These aren’t your usual “test button colors” tips — they’re clever, high-impact ideas that make your site feel smarter and more human.

1. Use a quiz instead of a lead magnet → Segment and personalize from the very first click. (Try it with Thrive Quiz Builder.)

2. Trigger offers based on page behavior → E.g. Show a pop-up at a certain point on the page or when someone is about to leave (learn more here)

3. Embed a short “Welcome Back” video → Make returning visitors feel seen and invited to take the next step. (New to creating video landing pages? Check out this tutorial)

4. Swap static lead magnets for 5-minute action challenges → Deliver instant wins, not just downloads.

5. Pre-sell with a mini story page → Warm up cold traffic with a problem → solution flow before the main offer.

Try one. Test it. See what happens. These little shifts often lead to big breakthroughs.

Wrapping Up This Conversion Optimization Checklist

If you’ve made it this far — you’re not just casually interested in conversions.

You’re committed. And that’s what separates “meh” websites from ones that actually grow your business.

Here’s the truth: you don’t need to do everything at once.

Pick one section of this checklist — maybe it’s simplifying your form, fixing your layout, or finally testing that headline — and take action this week.

Then come back. Do another.

Because conversion optimization isn’t a one-time task — it’s a habit. And every improvement stacks on the last.

🚀 If you’re not using Thrive Suite yet, this is your invitation. Everything in this checklist becomes easier when your tools are actually built to help you convert — not slow you down.

From landing pages and lead forms to quizzes, A/B testing, and behavior-based popups, Thrive Suite gives you the full toolkit to build smarter, test faster, and grow on purpose.

👉 Get Thrive Suite today.

✅ Ready to revisit the checklist? Scroll back up and start where you left off — or bookmark this for your next round of updates.

You’ve got this. Let’s go. 💪